Words matter, especially in job descriptions. And exclusionary words keep diverse talent from applying. Finding these words on your own is tricky and it can also take up a lot of time. So, how do you resolve this?

One of the best ways to do it is by using a job description bias tool. Here are 7 tools to save you time (and remove the human error element):

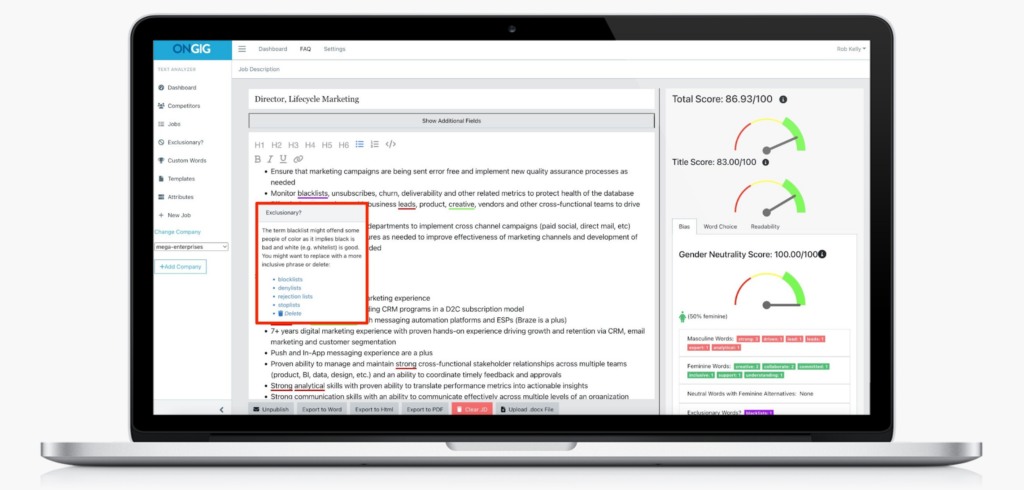

1. Ongig Job Description Bias Tool

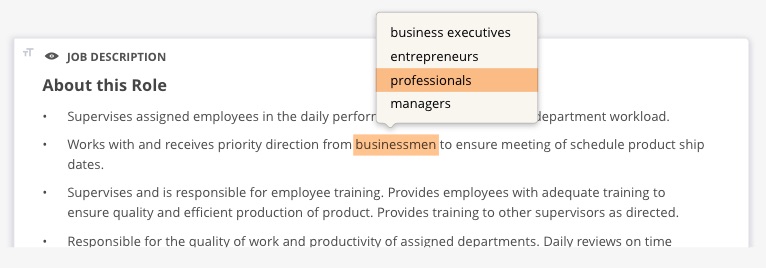

Ongig flags biased words in your JDs based on gender, race, age, disability, mental health, and more. The software also makes it easy to swap biased words for more inclusive ones.

Here are some examples of words this job description bias tool might find in your JDs:

- Gender-coded words: strong, objectives, principles, leader, and competitive might deter women from applying to your job ads.

- Racial Bias: a cakewalk, blacklist, native English speaker, spirit animal, and brown bag might keep candidates from Black, Indigenous, and People of Color (BIPOC) communities from applying.

- Age Bias: recent graduates only, the elderly, and digital native might deter older people from applying to your roles.

These are just a few examples of biased language that creeps into your job descriptions (sometimes unintentionally). You’ll find loads of others in Ongig’s Inclusive Language List for Job Ads. And that’s just a sampling of the 10,000+ words flagged automatically when you use the software.
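To make the idea concrete, here is a minimal sketch of how a word-list scan can work. It is not Ongig's implementation. The `FLAGGED_TERMS` dictionary and the `find_flagged_terms` function are hypothetical names, and the tiny term lists are borrowed from the examples above purely for illustration.

```python
import re

# Illustrative only: a handful of terms taken from the examples above.
# A real tool would rely on a far larger, curated list.
FLAGGED_TERMS = {
    "gender": ["strong", "competitive", "leader"],
    "race": ["blacklist", "native English speaker", "spirit animal", "brown bag", "cakewalk"],
    "age": ["recent graduates only", "digital native", "the elderly"],
}


def find_flagged_terms(text):
    """Return (category, term, start_index) tuples for every flagged term, sorted by position."""
    hits = []
    for category, terms in FLAGGED_TERMS.items():
        for term in terms:
            # Word boundaries keep "leader" from matching inside "cheerleaders".
            pattern = r"\b" + re.escape(term) + r"\b"
            for match in re.finditer(pattern, text, flags=re.IGNORECASE):
                hits.append((category, term, match.start()))
    return sorted(hits, key=lambda hit: hit[2])


sample = "We need a competitive digital native. Native English speaker preferred."
for category, term, position in find_flagged_terms(sample):
    print(f"{position:>3}  {category:<6}  {term}")
```

A plain word list like this has no sense of context, which is one reason dedicated tools pair their lists with suggested replacements and human review.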

Outside of scanning for biased words, Ongig also has built-in job title suggestions, JD workflow settings, an AI-based “missing section” finder, readability tips, a complex words finder, custom JD templates, and more.

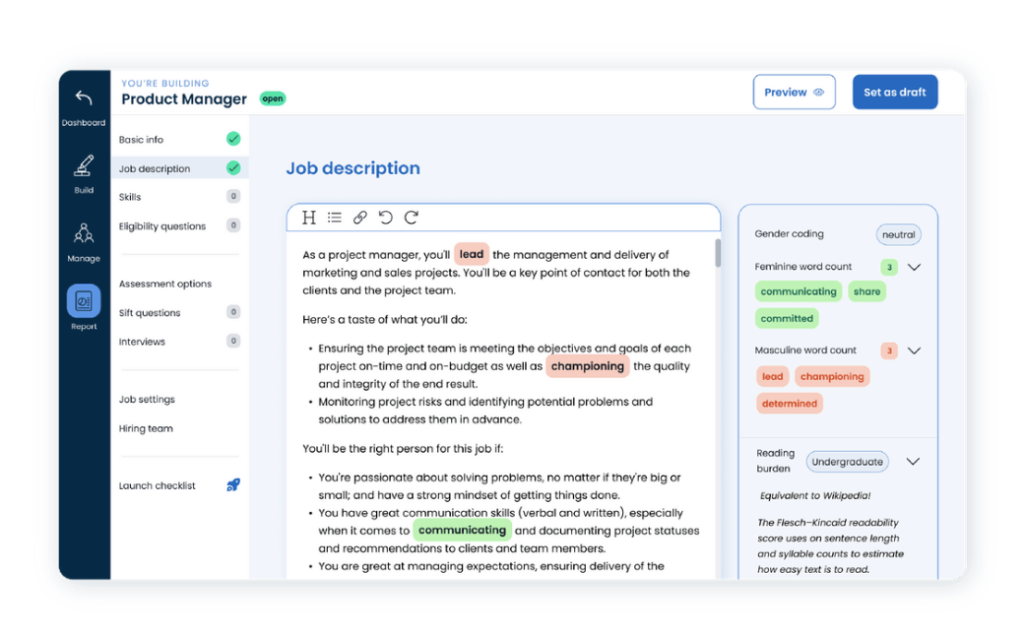

2. Applied Job Description Bias Tool

Applied is an applicant tracking system (ATS) with a focus on diversity, equality, and inclusion (DEI) in hiring. Its job description bias tool checks your JDs for gender-coded words because women are less likely to apply for a job with male-coded language.

Applied also:

- Checks the length of your job requirements. Long lists of requirements deter more women from applying because studies show women usually apply only to jobs when they know they’ll meet 100% of the requirements.

- Analyzes your JDs for ease of reading. The tool uses the Flesch reading scale to tell you how easy (or difficult) your JD is to read.

- Hosts regular DEI events.

- Has a job board for curated DEI jobs.
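If you are curious how a Flesch score is calculated, here is a rough sketch using the published Flesch reading ease formula: 206.835 minus 1.015 times the average words per sentence, minus 84.6 times the average syllables per word. This is not Applied's code. The function names are hypothetical, and the syllable counter is a simple vowel-group heuristic, so expect scores to differ slightly from a dedicated tool.

```python
import re


def count_syllables(word):
    """Rough estimate: count groups of consecutive vowels (a heuristic, not a dictionary lookup)."""
    groups = re.findall(r"[aeiouy]+", word.lower())
    return max(1, len(groups))


def flesch_reading_ease(text):
    """Flesch reading ease: 206.835 - 1.015 * (words / sentences) - 84.6 * (syllables / words)."""
    sentences = [s for s in re.split(r"[.!?]+", text) if s.strip()]
    words = re.findall(r"[A-Za-z']+", text)
    if not sentences or not words:
        raise ValueError("text must contain at least one sentence and one word")
    syllables = sum(count_syllables(w) for w in words)
    return 206.835 - 1.015 * (len(words) / len(sentences)) - 84.6 * (syllables / len(words))


plain = "We build apps. You will help us."
dense = "The incumbent will operationalize multidisciplinary organizational initiatives."
print(round(flesch_reading_ease(plain), 1))
print(round(flesch_reading_ease(dense), 1))
```

Higher scores mean easier reading, so the short, plain sentences should score well above the jargon-heavy one.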

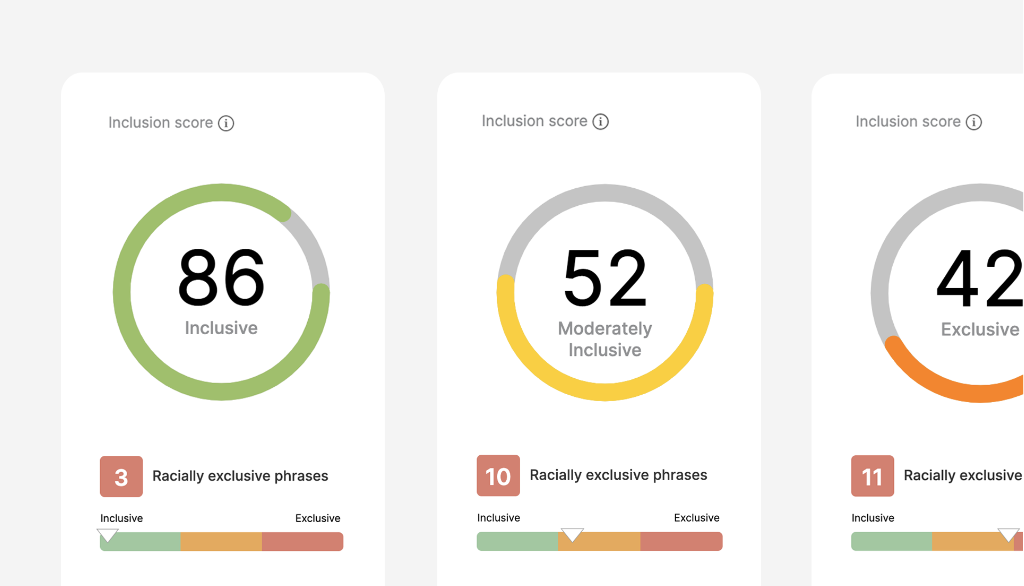

3. UInclude Job Description Bias Tool

UInclude’s job description bias tool identifies gender-based and racially exclusive phrases in your JDs. It also gives you more inclusive word replacements.

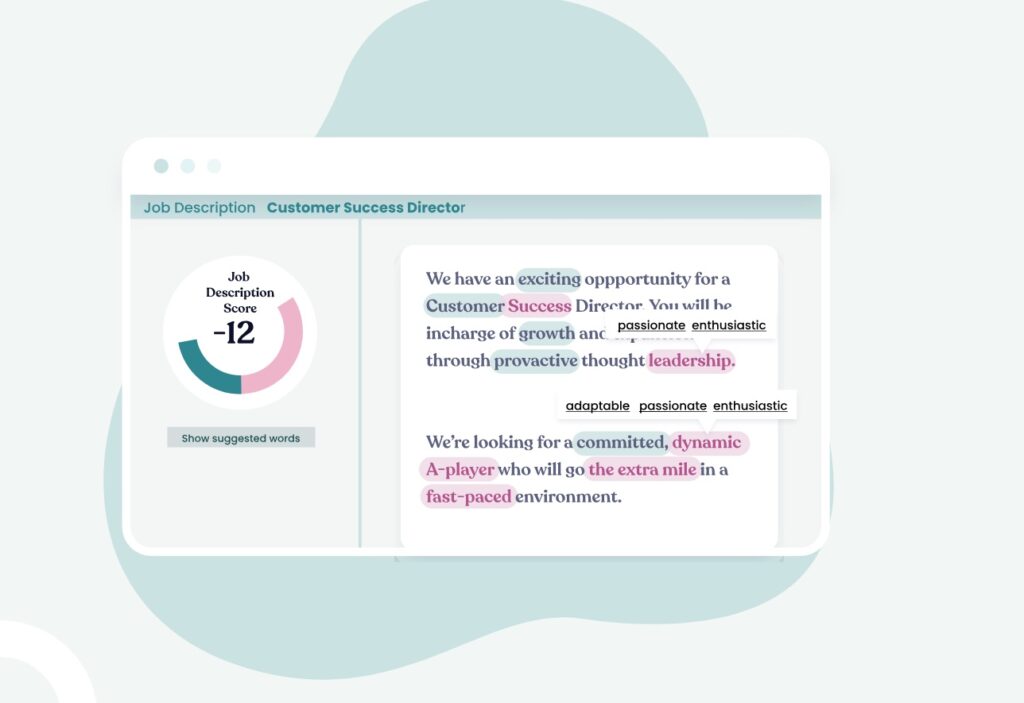

A unique feature of this tool is its performance prediction score, or “inclusion score.” This score tells you the inclusion level of your job ad and how it might appeal to underrepresented candidates. It also predicts that any JD with a score over 85 will have a higher rate of applicants and a lower cost per application.

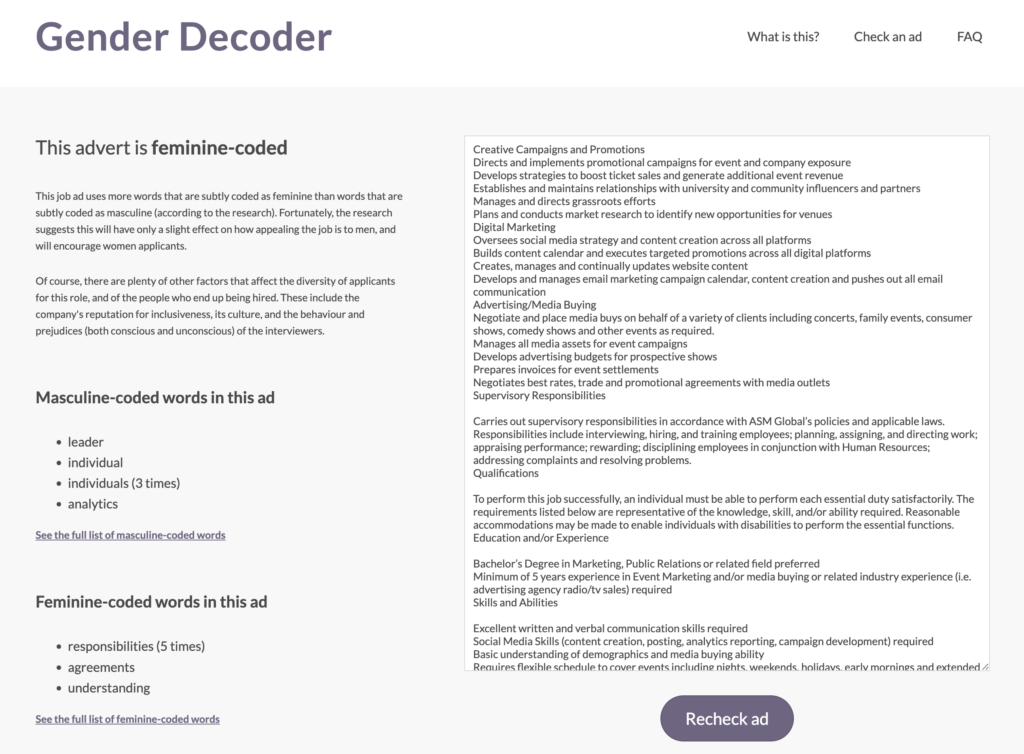

4. Gender Decoder Job Description Bias Tool

Gender Decoder is a free job description bias tool focused on gender bias. It finds subtle gender-coded language in your JDs and tells you whether each word is masculine-coded or feminine-coded. But it does not offer more gender-neutral replacements.

When you copy and paste your JD into the blank box and click “check this ad,” it automatically populates a list on the left grouped into masculine-coded and feminine-coded words. The downside is that it doesn’t underline the flagged words in your text, so you’ll have to search for them manually.

Note: Gender Decoder also allows you to make a copy (as stated in their license) as long as you include the copyright and permission notice.

5. Clovers AI Job Description Bias Tool

Clovers’ job description bias tool is designed to reduce bias in the hiring process. Like many of the JD tools on our list, theirs finds gendered and offensive language in the text of your job description. It offers neutral and inclusive replacements for problematic words and phrases, so you can ensure that your job description is appealing to qualified candidates of all backgrounds. Their tool also scores your job description as a whole, so you and your team can build your JD-writing skills.

Clovers’ robust job description bias search function finds words and phrases that may be offensive to LGBTQ+ folks, people of color, disabled people, veterans, women, and more. We like that they conduct extensive research, in partnership with professors at Duke University, on inclusive hiring to ensure their tools stay up-to-date with language changes. Language has become more inclusive in recent years, so it is critical that the tools we rely on to check for bias work to stay current.

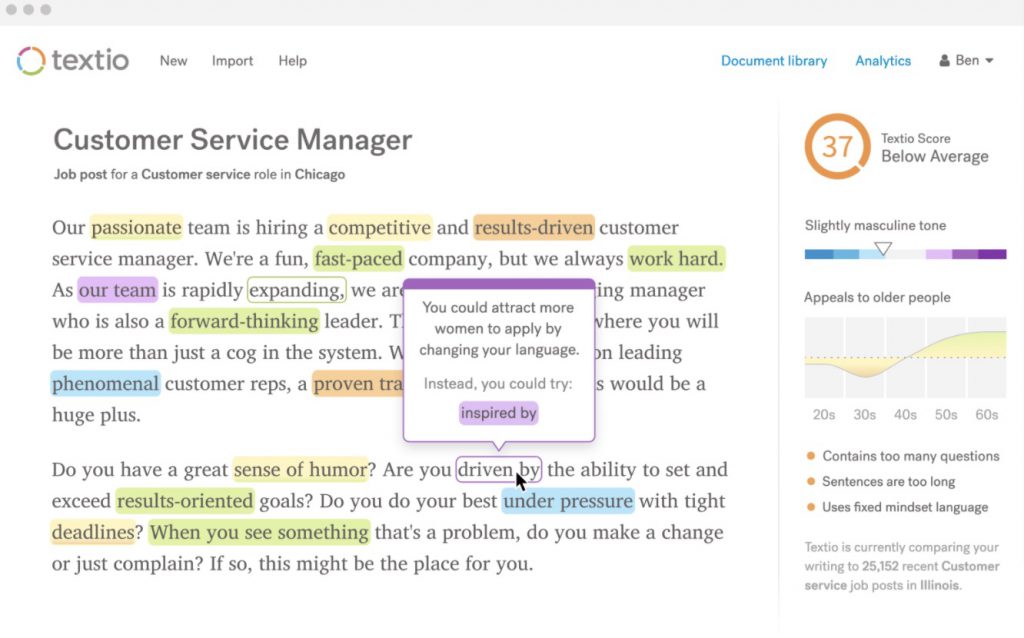

6. Textio Job Description Bias Tool

Textio creates inclusive human resources tools. Their job description bias tool is Textio Loop, which checks the text of your job descriptions for gendered or otherwise problematic language. Textio Loop scores your job descriptions so you can evaluate their inclusiveness before pressing publish. It pinpoints biased language and recommends neutral, inclusive substitutions. Interestingly, their tool also allows you to check how well your job description will appeal to someone of a certain group. We love how it tracks your job descriptions over time, so you can see how your inclusivity is improving and take note of where you have room for improvement.

7. JobWriter Job Description Bias Tool

JobWriter’s job description bias tool provides inclusive, AI-generated job description templates. It also offers an evaluator function, so you can check your existing job descriptions for biased, gendered, and offensive language. We like its culture-fit features and its robust JD-scanning capabilities.

Unlike some of the other tools on our list, JobWriter is available in many languages, so you can create inclusive job descriptions for international candidates. JobWriter integrates with most existing HRISs, so you can add a job description tool to your repertoire without overcomplicating your HR workflow.

How Hiring Best Practices Are Changing in 2024

One reason we recommend working with a job description bias tool is that the HR landscape is constantly changing. As public sentiment changes, HR teams must also update their recruitment process to reflect the needs and wishes of diverse candidates and job seekers. A job description bias tool is a supportive tool that can help you recognize and address unconscious biases in yourself or your team and create job listings that truly reflect your diverse values. Here are a few changes to hiring best practices that we are seeing in 2024:

- Pay transparency. Candidate advocacy has resulted in several states (such as Colorado and Connecticut) and localities (like New York City) passing laws that require employers to provide transparent information about pay or salary to applicants. These laws vary greatly, but the general idea is that pay transparency helps to address gender and racial pay gaps, thereby creating a more diverse candidate pool. Pay transparency laws are popular with job seekers. According to research from SHRM, 82% of candidates are more likely to apply to a job with a pay range in the job listing. Tools such as Ongig’s Text Analyzer are also helping employers to remove other language that contains age-related bias from their job descriptions.

- Rethinking ‘years of experience’. This common feature of job posts is changing. For years, companies have listed required or preferred years of experience that were inflated beyond what they realistically expected, discouraging younger and less-qualified applicants from entering the recruitment process. Some employers also offer a range of acceptable years of experience, which may discriminate against older people. The Age Discrimination in Employment Act (ADEA) prohibits employers from discriminating against candidates and employees age 40 and older. For these reasons, many employers are switching to listing only a true minimum number of years of experience in their job posts.

- Artificial intelligence and machine learning are increasingly common in recruiting. AI is quickly growing in the human resources sector, with affordable AI-powered software within reach even for small businesses. While we believe in AI and even use it in our Text Analyzer tool, we also know the importance of keeping AI focused on DEI, transparency, and social responsibility. AI programs that incorporate the unconscious biases of their creators can have dangerous and discriminatory results. We expect that this year will bring increased scrutiny of these popular tools, both from industry and from government regulators.

Why I Wrote This:

Fair, effective, and inclusive job descriptions are critical for a diverse talent pipeline. Improve your hiring process with Ongig. Our job description bias tool is the most robust, flexible, and effective on the market. Contact us today for a demo and see why HR departments across many industries trust us to make their job postings more inclusive.